ML Models as Web Endpoints

Ready to deploy your machine learning model but can't decide where to start? Our article on ML Models as Web Endpoints comes to the rescue to help you get started.

Taking Machine learning models to production is the ultimate aim. Making a software system available for use is called deployment. Scalability is the property of the system to handle a growing amount of work by adding resources to the system.

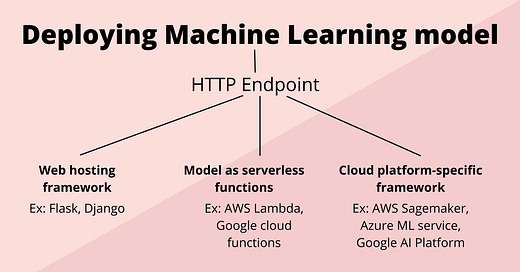

More often than not, the problem of model serving is soon followed by the challenge of making the deployment scalable. The deployment can be done as:

Web Endpoints

Serverless functions

In this post, we will be discussing:

Introduction - Models as web endpoints

Model persistence

Deploying web endpoints

Deployment options in Flask

Heroku

HTTP servers: WSGI and Gunicorn

Last week at Cafe IO, we published a blog post on ML Models as Serverless Functions, where we focused on understanding models as serverless functions, explored two popular serverless offerings (Google Cloud Functions on GCP, and AWS Lambda), and compared PaaS with serverless functions.

Link to the article: ML Models as Serverless Functions

Deploying Machine Learning Model - A Schematic View


Introduction - Models as web endpoints

A model endpoint is a setup that returns an output when you supply it with a set of parameters or inputs: the web endpoint takes the input parameters, passes them to the ML model, and returns the model's output. This setup provides real-time results, which makes it useful for end-users.

A web endpoint is simply a web service exposed at a URL through which users can access a specific service.

After you have designed your model and specified the requirements, it is time to take your model to the world and reach your users. One option is to precompute the results and show them to your users. However, if you want your model to work with real-time data, you can set up a predictive Machine Learning web endpoint that your users can consume.

There are various architectures and protocols that can help you create web endpoints and establish communication between different computer systems. A few of them are discussed below:

REST

REST, or REpresentational State Transfer, is an architectural style for providing standards between computer systems on the web.

In the simplest terms, in the REST architectural style, data and functionality are considered resources and are accessed using Uniform Resource Identifiers (URIs). The resources are acted upon by using a set of simple, well-defined operations. The clients and servers exchange representations of resources by using a standardized interface and protocol, typically HTTP.

A RESTful API is an API that follows the principles of REST. When a client makes a request to a RESTful API, the request is routed to the endpoint, and the response is returned as a representation of the resource, most often JSON, but also formats such as HTML, XML, or plain text. Flask, a very popular Python deployment option, has an extension, Flask-RESTful, that helps build REST APIs.
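To make these REST ideas concrete, here is a minimal sketch of a REST-style prediction endpoint built only on Python's standard library (`http.server`). The resource URI `/predictions`, the `features` field, and the summing "model" are hypothetical placeholders, not part of any real service; the request handling is kept in a plain function so it can be exercised without opening a socket.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Tuple


def predict_resource(payload: dict) -> dict:
    # Hypothetical stand-in for a real model: "predicts" the sum of the features.
    return {"prediction": sum(payload["features"])}


def handle_post(path: str, body: bytes) -> Tuple[int, bytes]:
    """Map a POST on a resource URI to a status code and a JSON representation."""
    if path != "/predictions":
        return 404, json.dumps({"error": "unknown resource"}).encode("utf-8")
    try:
        result = predict_resource(json.loads(body))
    except (ValueError, KeyError, TypeError):
        # Malformed JSON, a missing "features" key, or non-numeric values.
        return 400, json.dumps({"error": "invalid input"}).encode("utf-8")
    return 200, json.dumps(result).encode("utf-8")


class PredictionHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        status, body = handle_post(self.path, self.rfile.read(length))
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port: int = 8000) -> None:
    # http.server is for local experiments only, not production traffic.
    HTTPServer(("127.0.0.1", port), PredictionHandler).serve_forever()
```

After calling `serve()`, a client could POST `{"features": [1, 2, 3]}` to `http://127.0.0.1:8000/predictions` and receive the JSON representation `{"prediction": 6}`. The HTTP verb, the resource URI, and the JSON body are the three REST ingredients described above.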

gRPC

gRPC is a modern open source high performance Remote Procedure Call (RPC) framework that can run in any environment. It can efficiently connect services in and across data centers with pluggable support for load balancing, tracing, health checking and authentication.

— gRPC.io

gRPC is based around the idea of defining a service, specifying the methods that can be called remotely with their parameters and return types. On the server-side, the server implements this interface and runs a gRPC server to handle client calls.

On the client side, the client has a stub (referred to as just a client in some languages) that provides the same methods as the server. Many newer Google APIs also offer gRPC versions of their interfaces, letting you easily build Google functionality into your application. By default, gRPC uses Protocol Buffers both to define services and to serialize the messages exchanged.

SOAP

SOAP is an acronym for Simple Object Access Protocol. It is an XML-based messaging protocol for exchanging information among computers. SOAP is an application of the XML specification. SOAP can extend HTTP for XML messaging. It enables client applications to easily connect to remote services and invoke remote methods.
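To show what "XML-based messaging" looks like in practice, here is a minimal sketch that builds a SOAP 1.1 request envelope with Python's built-in `xml.etree.ElementTree`. The envelope namespace is the standard SOAP 1.1 one; the service namespace `http://example.com/iris`, the `Predict` method, and its parameters are hypothetical, chosen only for illustration.

```python
import xml.etree.ElementTree as ET

# Standard SOAP 1.1 envelope namespace.
SOAP_ENV = "http://schemas.xmlsoap.org/soap/envelope/"
# Hypothetical namespace of the remote service, for illustration only.
SERVICE_NS = "http://example.com/iris"


def build_soap_request(method, params):
    """Wrap a remote method call and its parameters in a SOAP Envelope/Body."""
    ET.register_namespace("soap", SOAP_ENV)
    envelope = ET.Element(f"{{{SOAP_ENV}}}Envelope")
    body = ET.SubElement(envelope, f"{{{SOAP_ENV}}}Body")
    # The remote method becomes an element, and each parameter a child element.
    call = ET.SubElement(body, f"{{{SERVICE_NS}}}{method}")
    for name, value in params.items():
        ET.SubElement(call, f"{{{SERVICE_NS}}}{name}").text = str(value)
    return ET.tostring(envelope, encoding="unicode")
```

With SOAP 1.1 over HTTP, a string like `build_soap_request("Predict", {"sepal_length": 5.1})` is sent as the body of a POST request with a `text/xml` content type and a `SOAPAction` header, and the server replies with another envelope containing the result.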

Model Persistence

When it comes to model serving, we have two options:

Train the model within the web service application

Use a pre-trained model

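The first option introduces higher latency into the service and is computationally expensive, since the model would have to be trained inside the running application. The second option, loading a model that was trained ahead of time, is usually the more useful one.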

Model persistence is the term used for saving and loading models within a predictive model pipeline. To reuse a model in the future without having to retrain it, the trained model has to be saved. Python's pickle module is commonly used for this in Flask applications. Pickling converts a Python object into a byte stream, which is not human-readable, and unpickling is the reverse process, in which the Python object is reconstructed from that byte stream. Throughout this round trip, the object's attributes are preserved in the same state.
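The save-once, load-at-startup pattern described above can be sketched with the standard `pickle` module. So that the example runs without scikit-learn, it uses a hypothetical toy model class, `MeanThresholdModel`; the same `pickle.dump` and `pickle.load` calls apply to a fitted scikit-learn estimator.

```python
import os
import pickle
import tempfile


class MeanThresholdModel:
    """Hypothetical toy model: predicts 1 when a row's mean exceeds a learned threshold."""

    def __init__(self):
        self.threshold = None

    def fit(self, rows):
        # "Training": the threshold is the average of the per-row means.
        self.threshold = sum(sum(r) / len(r) for r in rows) / len(rows)
        return self

    def predict(self, rows):
        return [int(sum(r) / len(r) > self.threshold) for r in rows]


MODEL_PATH = os.path.join(tempfile.gettempdir(), "model.pkl")

# Train once, offline.
model = MeanThresholdModel().fit([[1.0, 2.0], [3.0, 4.0]])

# Pickling: serialize the fitted object into a byte stream on disk.
with open(MODEL_PATH, "wb") as f:
    pickle.dump(model, f)

# Unpickling: rebuild the object, e.g. once when the web service starts.
with open(MODEL_PATH, "rb") as f:
    restored = pickle.load(f)
```

Two practical caveats apply. First, unpickling needs the model's class to be importable in the serving process, and for scikit-learn models it is safest to use the same library version that was used for training. Second, never unpickle files from untrusted sources, because loading a pickle can execute arbitrary code.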

Creating web endpoints

A web endpoint can be created using various frameworks. A few popular ones that can be considered are:

Flask

FastAPI

Plumber

TensorFlow Serving

Flask

Flask is an easy-to-use framework that has a built-in development server and debugger. It is very common for prototyping Machine Learning models as web endpoints. It has integrated unit testing support and RESTful request dispatching, and it is extensively documented. Flask's built-in server is meant for local development, so in production you will need a WSGI HTTP server such as Gunicorn, usually placed behind a reverse proxy or load balancer.

The Flask application object itself is the actual WSGI application.

What is WSGI?

WSGI stands for Web Server Gateway Interface. It is a specification that describes how a web server communicates with web applications, and how web applications can be chained together to process one request. WSGI is not a piece of software, a library, or a framework.
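To make the specification concrete, here is a minimal sketch of a WSGI application written with nothing but the standard library. Under WSGI, an application is any callable that takes `(environ, start_response)` and returns an iterable of bytes; a Flask app object exposes this same interface. The echoed-path response and the `call_app` helper are illustrative only; in production, a WSGI server such as Gunicorn would call `app` itself.

```python
from wsgiref.simple_server import make_server


def app(environ, start_response):
    """A minimal WSGI application: any callable taking (environ, start_response)."""
    # The server passes request data in the environ dict (CGI-style keys).
    path = environ.get("PATH_INFO", "/")
    body = f"You requested {path}".encode("utf-8")
    # Report the status and headers to the server before returning the body.
    start_response("200 OK", [
        ("Content-Type", "text/plain; charset=utf-8"),
        ("Content-Length", str(len(body))),
    ])
    return [body]  # the response body is an iterable of byte strings


def call_app(path):
    """Drive the app directly, as a WSGI server would, without any networking."""
    captured = {}

    # Simplified: a real server's start_response also returns a write() callable.
    def start_response(status, headers):
        captured["status"] = status
        captured["headers"] = headers

    environ = {"PATH_INFO": path, "REQUEST_METHOD": "GET"}
    body = b"".join(app(environ, start_response))
    return captured["status"], body


def serve(port=8000):
    # wsgiref's reference server is only suitable for local testing.
    with make_server("127.0.0.1", port, app) as httpd:
        httpd.serve_forever()
```

Because the interface is just a function call, the same `app` could be served unchanged by Gunicorn, for example with `gunicorn module_name:app`, where `module_name` is whatever file holds the code.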

A short demo of Flask

```python
from flask import Flask

app = Flask(__name__)

@app.route("/")
def hello():
    return "Hi! This is a Sample demo of Flask"

if __name__ == '__main__':
    app.run(debug=True)
```

Running the script and opening http://127.0.0.1:5000/ in a browser displays the greeting (5000 is Flask's default port number).

Below is an example of serving an Iris flower classification model with Flask:

```python
import os
import pickle

import numpy as np
from flask import Flask, request, jsonify

# Load the pre-trained model once at start-up, not on every request.
MODEL_PATH = os.path.join('C:/Users/bhosl/Python', 'iris_model')
with open(MODEL_PATH, 'rb') as f:
    model = pickle.load(f)

app = Flask(__name__)

@app.route('/api', methods=['POST'])
def predict():
    # Parse the JSON body even if the Content-Type header is not application/json.
    data = request.get_json(force=True)
    # The model expects a 2-D array: one row per sample, four features per row.
    features = np.array([[data["sepal_length"], data["sepal_width"],
                          data["petal_length"], data["petal_width"]]])
    prediction = model.predict(features)
    # Cast the NumPy class label to a plain int so it is JSON-serializable.
    return jsonify(int(prediction[0]))

if __name__ == '__main__':
    app.run(port=5000, debug=True)
```

To get a result, we can send our input from a client-side script:

```python
import requests

# Send the four Iris features as a JSON body to the local Flask endpoint.
r = requests.post('http://localhost:5000/api', json={"sepal_length": 3.2,
                                                    "sepal_width": 7.3,
                                                    "petal_length": 4.5,
                                                    "petal_width": 2.1})
print(r.status_code)  # 200 if the request succeeded
print(r.json())       # the predicted class label
```

Dash makes it easy to build interactive web applications on top of Flask.

Dash is a productive Python framework for building web analytic applications. Written on top of Flask, Plotly.js, and React.js, Dash is ideal for building data visualization apps with highly custom user interfaces in pure Python. It's particularly suited for anyone who works with data in Python. Running a single .py script that combines a Machine Learning model with a web layout generates a dynamic web page.

FastAPI

FastAPI is a modern, fast (high-performance), web framework for building APIs with Python 3.6+ based on standard Python type hints.

— FastAPI

FastAPI makes building APIs in Python straightforward. It enhances code maintainability by separating the server code from the business logic. Its biggest advantage over Flask is performance: it is built on ASGI (Asynchronous Server Gateway Interface), which supports asynchronous request handling, whereas Flask is built on WSGI.

FastAPI also generates interactive API documentation in the browser (Swagger UI and ReDoc), which Flask does not provide out of the box. The documentation is created on the go as you develop the API, which comes in handy when you are collaborating on a project with other teammates.

It does all this by following the OpenAPI specification and using Swagger UI to render it. As a developer, you focus only on building the logic, and FastAPI manages the rest.
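To see what "built on ASGI" means, here is a minimal sketch of a raw ASGI application using only the standard library. Under ASGI, an application is an async callable taking `(scope, receive, send)` that sends its response as a sequence of events; FastAPI applications have this shape, and ASGI servers such as Uvicorn call them. The echoed-path response and the `call_app` helper are illustrative only.

```python
import asyncio


async def app(scope, receive, send):
    """A minimal ASGI application: an async callable taking (scope, receive, send)."""
    if scope["type"] != "http":
        return  # ignore other protocols (e.g. "lifespan") in this sketch
    body = f"You requested {scope['path']}".encode("utf-8")
    # The response is sent as events: first the status and headers, then the body.
    await send({
        "type": "http.response.start",
        "status": 200,
        "headers": [(b"content-type", b"text/plain; charset=utf-8")],
    })
    await send({"type": "http.response.body", "body": body})


async def call_app(path):
    """Drive the app directly, as an ASGI server would, collecting the sent events."""
    sent = []

    async def receive():
        return {"type": "http.request", "body": b"", "more_body": False}

    async def send(message):
        sent.append(message)

    await app({"type": "http", "path": path}, receive, send)
    return sent


# Usage: events = asyncio.run(call_app("/predict"))
```

Compared with the synchronous WSGI callable, every step here is awaitable, so a single server process can interleave many slow requests (for example, ones waiting on I/O) instead of blocking on each one.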

Plumber

Plumber is an open-source R package that can help you turn your R models and programs into web endpoints. Like Flask and FastAPI, it is used to build REST APIs. It can be used, for example, to embed a graph created in R on a website or to run a forecast on some data from a Java application.

TensorFlow Serving

TensorFlow Serving exposes models through both REST and gRPC APIs. It makes it easy to deploy new algorithms and experiments while keeping the same server architecture. It integrates with TensorFlow models out of the box, but it can be extended to serve other model types as well.
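As an illustration of the REST side, here is a minimal sketch that builds, without sending, a prediction request for TensorFlow Serving's REST API using only the standard library. The URL pattern `/v1/models/<name>:predict` and the `{"instances": [...]}` body follow TensorFlow Serving's documented REST format. The model name `iris`, the input row, and the default port 8501 (the REST port used in TensorFlow Serving's Docker image and documentation examples) are assumptions for this example.

```python
import json
import urllib.request


def build_predict_request(model_name, instances, host="localhost", port=8501):
    """Build (without sending) a TensorFlow Serving REST predict request."""
    # TensorFlow Serving's REST API exposes POST /v1/models/<name>:predict.
    url = f"http://{host}:{port}/v1/models/{model_name}:predict"
    # "Row" format: one list of feature values per instance.
    payload = json.dumps({"instances": instances}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

Against a running server, the request could be sent with `urllib.request.urlopen(req)`, and the model outputs read from the `"predictions"` key of the JSON response.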

Deployment options for web endpoints

After you have created a web endpoint using one of the frameworks listed above, the next step is to deploy it. Flask's built-in server, like the auto-reloading development setups commonly used with FastAPI, is fine for running on your local machine but is not intended for a production environment. To get your Machine Learning model to production, there are several options:

Heroku: Heroku is a container-based cloud Platform as a Service (PaaS). Developers use Heroku to deploy, manage, and scale modern apps. It is elegant, flexible, and easy to use, offering developers the simplest path to getting their apps to market.

Google App Engine: Google App Engine allows app developers to build scalable web and mobile back ends in any programming language on a fully managed serverless platform.

AWS Elastic Beanstalk: It is an orchestration service offered by Amazon Web Services for deploying and scaling web applications and services.

Azure (IIS): Internet Information Services (IIS) on Azure is a solution to help businesses host websites and applications securely on the cloud.

Virtual Machine on Cloud: Python scripts can be deployed on a Linux virtual machine on Google Cloud Platform or AWS.

Containers: A container is a standard unit of software that packages up code and all its dependencies so the application runs quickly and reliably from one computing environment to another.
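Whichever of these platforms you pick, a Python web endpoint is typically run by a production WSGI server such as Gunicorn rather than by Flask's built-in server. Gunicorn can read its settings from a Python file, so here is a minimal sketch of a `gunicorn.conf.py`. The module name `app.py`, the 60-second timeout, and the fallback port 8000 are assumptions for illustration; the `(2 x CPU cores) + 1` worker count is the starting point suggested in Gunicorn's documentation.

```python
# gunicorn.conf.py: a hypothetical Gunicorn configuration file.
# Start the server with:  gunicorn -c gunicorn.conf.py app:app
# where "app:app" assumes a module app.py exposing a WSGI object named app.
import multiprocessing
import os

# Platforms such as Heroku pass the port to listen on in the PORT variable.
bind = "0.0.0.0:" + os.environ.get("PORT", "8000")

# Gunicorn's documentation suggests (2 x CPU cores) + 1 workers as a starting point.
workers = multiprocessing.cpu_count() * 2 + 1

# Allow slower model inference before a worker is restarted (Gunicorn's default is 30 s).
timeout = 60

# Write access logs to stdout so the platform can collect them.
accesslog = "-"
```

Each worker is a separate process that loads its own copy of the model, so memory use grows with the worker count; for large models, fewer workers may be the better trade-off.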

Conclusion

Python provides a conducive environment with a variety of options to deploy and serve Machine Learning models and enable real-time usage through web applications. It lets you create scalable APIs that can be deployed on the open web, as well as custom UI applications on top of backend frameworks like Flask.

We talked about web endpoints, the tools for building them, and the deployment options you can look at. We hope this has given you an understanding of the various ways to serve your Machine Learning models as web endpoints.

Read more: ML Models as Serverless Functions

Have a Nice Day and Subscribe to Cafe and Engineering if you haven’t already subscribed.