ML Model deployment in Containers: Part 2

Ready to deploy your machine learning model but can't choose between the options? Coming to the rescue is our article on ML Model deployment in Containers, to help you navigate Kubernetes!

Taking machine learning models to production is the ultimate aim. Making a software system available for use is called deployment. Scalability is the ability of a system to handle a growing amount of work by adding resources to it.

At Cafe I/O, we have been publishing content on various options to deploy your machine learning model.

This is Part 2 of our series on ML Model deployment in Containers.

In Part 1 of our series on ML model deployment in Containers, we introduced containers, compared virtualization and containerization, and discussed Docker and Container orchestration.

This post, Part 2, will cover:

Docker setup

Basic commands in Docker

Hands-on: Deploying ML Model on Docker

Container Orchestration

Conclusion


Docker setup

To get Docker up and running, first install it on your system. Installation steps depend on your operating system; follow the instructions for yours at this link: Install Docker

After you have installed it, open the Docker application, create your Docker ID and log in with those details. That's it! Docker is ready to use.

Basic commands in Docker

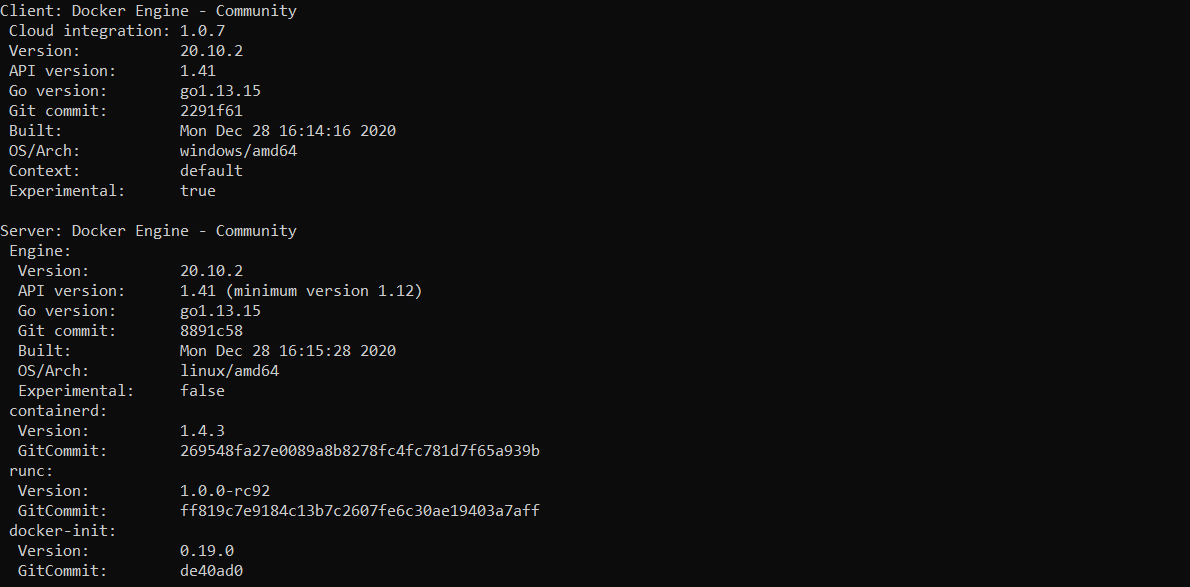

To check the version of Docker on your machine and to ensure everything is running smoothly, run the following command in your terminal or command prompt:

```shell
docker version
```

Your output will look something like this:

After checking the Docker version, let's run the customary "Hello World" container.

```shell
docker run hello-world
```

You will get an output like this:

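Three steps take place, one after the other:

The Docker daemon first looks for the hello-world image locally.

If it does not find the image, it pulls it from the registry (Docker Hub by default).

The Docker daemon creates a container from the image and runs it, streaming the output back to your terminal.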
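To make the "pull only if missing" logic concrete, here is a toy Python model of what happens on docker run. It is not Docker's implementation: the function run_image and the dictionaries standing in for the local image cache and the remote registry are hypothetical, chosen only to mirror the three steps above.

```python
def run_image(name, local_images, registry):
    """Toy model of `docker run <name>`: look up locally, pull if missing, then run.

    local_images and registry are hypothetical dicts mapping image names to image
    contents; they stand in for the local image cache and a remote registry.
    """
    steps = []
    if name in local_images:
        # Step 1 succeeded: the image is already cached locally
        steps.append(f"found {name} locally")
    else:
        # Step 2: the image is not cached, so pull it from the registry
        if name not in registry:
            raise LookupError(f"image {name!r} not found in registry")
        local_images[name] = registry[name]
        steps.append(f"pulled {name} from registry")
    # Step 3: create a container from the image and run it
    steps.append(f"running {local_images[name]}")
    return steps


registry = {"hello-world": "hello-world image"}
cache = {}
print(run_image("hello-world", cache, registry))  # first run pulls the image
print(run_image("hello-world", cache, registry))  # second run uses the cached copy
```

This is why the second docker run hello-world on the same machine does not download anything: the image is already in the local cache.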

This is how Docker pulls an image from a registry and runs it. hello-world is a very simple image, so let's pull another image that we can use to explore the operations you can perform on a pulled image.

```shell
docker pull ubuntu
```

ubuntu is one of the most popular images on Docker Hub, the default Docker registry. When we pulled it, the image had 3 layers, each shown in the output as an alphanumeric ID; the exact number of layers depends on the image version.

Now that we have the image, we can run a container from it:

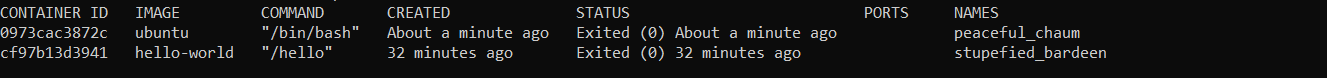

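```shell
docker run ubuntu
```

The container starts, runs its default command (a bash shell) and exits immediately, because no interactive terminal is attached. To get an interactive shell instead, run docker run -it ubuntu. You can see all the containers on your system, including stopped ones, by running:

```shell
docker ps -a
```

The output contains the following columns: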

CONTAINER ID: this shows the unique ID of each container

IMAGE: the image from which the container is created

COMMAND: the command executed in the container when it starts

CREATED: the time the container was created

STATUS: the current status of the container

PORTS: if any of the container's ports are published to the host machine, the mapping is displayed here

NAMES: this is the name of a container. If it is not provided while creating the container, Docker provides a unique name by default.
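If you want to work with this table from a script, docker ps can print each row as a JSON object with --format '{{json .}}'. The sketch below is a hypothetical helper, not an official Docker tool: it assumes the Docker CLI is on your PATH, runs that command with subprocess, and keeps only the columns listed above. The JSON keys (ID, Image, Command, CreatedAt, Status, Ports, Names) are the field names Docker uses in its --format templates.

```python
import json
import subprocess


def parse_ps_output(output):
    """Turn `docker ps -a --format '{{json .}}'` output (one JSON object per line)
    into a list of dicts keyed by the column names of the default table."""
    rows = []
    for line in output.splitlines():
        line = line.strip()
        if not line:
            continue  # skip blank lines
        record = json.loads(line)
        rows.append({
            "CONTAINER ID": record.get("ID"),
            "IMAGE": record.get("Image"),
            "COMMAND": record.get("Command"),
            "CREATED": record.get("CreatedAt"),  # absolute creation timestamp
            "STATUS": record.get("Status"),
            "PORTS": record.get("Ports"),
            "NAMES": record.get("Names"),
        })
    return rows


def list_all_containers():
    """Run `docker ps -a` (including stopped containers) and parse its rows.
    Requires the Docker CLI and a running Docker daemon."""
    result = subprocess.run(
        ["docker", "ps", "-a", "--format", "{{json .}}"],
        capture_output=True, text=True, check=True,
    )
    return parse_ps_output(result.stdout)
```

For example, [c["NAMES"] for c in list_all_containers() if c["IMAGE"] == "ubuntu"] would list the names of the ubuntu containers created above. Keeping the parsing separate from the subprocess call makes it easy to test without a Docker daemon.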

Now that we know how to pull an image, run it and inspect the containers, let's see how you can deploy your ML model on Docker.

Hands-on: Deploying ML Model on Docker

Last week, we got an introduction to containers, Docker and container orchestration. Now it is time for hands-on practice on deploying your machine learning model on Docker. We will go step by step:

First, let's create a very simple machine learning model and save it as a pickle file on our system.

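```python
import pickle

import numpy as np
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier

# Load the Iris dataset and split it into training and test sets
iris_dataset = load_iris()
X_train, X_test, y_train, y_test = train_test_split(
    iris_dataset['data'], iris_dataset['target'], random_state=0)

# Train a 1-nearest-neighbour classifier
knn = KNeighborsClassifier(n_neighbors=1)
knn.fit(X_train, y_train)

# Predict the species of a single new flower
X_new = np.array([[5, 2.9, 1, 0.2]])
prediction = knn.predict(X_new)
print("Predicted species:", iris_dataset['target_names'][prediction])

# Evaluate the model on the held-out test set
y_pred = knn.predict(X_test)
print("Test accuracy:", np.mean(y_pred == y_test))

# Save the trained model as a pickle file (the with block closes the file)
filename = 'iris_classification.pkl'
with open(filename, 'wb') as f:
    pickle.dump(knn, f)
```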

The model above solves the Iris flower classification problem, which most of us tackled when we started our journey in machine learning. We have saved the trained model as a pickle file, iris_classification.pkl, which we will use later on.

The next step is to create a web endpoint using Flask. For a more detailed tutorial, you can visit this article:

Your final Flask application will look something like this:
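As a minimal sketch of such an app.py, assuming Flask is installed and the model was saved as iris_classification.pkl by the script above, the app could load the pickle and expose a prediction endpoint. The /predict route, the JSON shape {"features": [...]}, and the helper names load_model and create_app are our own illustrative choices, not necessarily what the original application uses.

```python
import pickle

from flask import Flask, jsonify, request

# Iris class labels in the order used by load_iris().target_names
SPECIES = ["setosa", "versicolor", "virginica"]


def load_model(path="iris_classification.pkl"):
    """Load the pickled classifier saved by the training script."""
    with open(path, "rb") as f:
        return pickle.load(f)


def create_app(model):
    """Build a Flask app that serves predictions from an already-loaded model."""
    app = Flask(__name__)

    @app.route("/predict", methods=["POST"])
    def predict():
        # Expect a JSON body such as {"features": [5.0, 2.9, 1.0, 0.2]}
        payload = request.get_json(silent=True)
        if not payload or "features" not in payload:
            return jsonify({"error": 'expected JSON body with a "features" list'}), 400
        # scikit-learn expects 2-D input: one row per sample
        label = int(model.predict([payload["features"]])[0])
        return jsonify({"class_id": label, "species": SPECIES[label]})

    return app
```

To use this as the app.py that the Dockerfile runs, end the file with a __main__ guard that calls create_app(load_model()).run(host="0.0.0.0", port=5000). Binding to 0.0.0.0 rather than Flask's default 127.0.0.1 makes the server reachable from outside the container, and port 5000 matches the EXPOSE line in the Dockerfile. Passing the model into create_app also lets you test the endpoint with Flask's test client without a pickle file on disk.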

If you haven't already, install Docker Desktop and create a Docker ID as described in the Docker setup section, so you can start working on your Dockerfile.

Next, create a file named Dockerfile in your Flask project folder. The file does not need an extension.

```dockerfile
FROM python:3.7

# Create a virtual environment at /venv and put it first on the PATH
RUN pip install virtualenv
ENV VIRTUAL_ENV=/venv
RUN virtualenv $VIRTUAL_ENV -p python3
ENV PATH="$VIRTUAL_ENV/bin:$PATH"

WORKDIR /app
COPY . /app

# to install dependencies listed in requirements.txt (e.g. flask, scikit-learn, numpy)
RUN pip install -r requirements.txt

# to expose port 5000, the port the Flask app listens on
EXPOSE 5000

# to run the application:
CMD ["python", "app.py"]
```

Next, we need a container registry to store the image. We use Azure Container Registry on Microsoft Azure, but the AWS registry (Amazon ECR) works as well. After creating the registry, build the Docker image by running this command in the folder that contains your project files:

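```shell
docker build -t srtpan.azurecr.io/iris-classification:latest .
```

Decoding the command above:

srtpan.azurecr.io is the login server of the registry, which you get when you create the registry resource on the Azure portal.

iris-classification is the name of the image and latest is the tag. Both can be anything you want.

The final . tells Docker to use the current folder as the build context, so Docker reads the Dockerfile from this folder and can copy your project files into the image.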
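The -t value follows Docker's [registry/]repository[:tag] naming convention. As a rough illustration, here is a simplified Python parser for such references. It is our own sketch, not Docker's implementation: it ignores digests (@sha256:...) and some other normalization rules, and it applies Docker's defaults of the docker.io registry and the latest tag when those parts are omitted.

```python
def parse_image_reference(ref):
    """Split an image reference such as 'srtpan.azurecr.io/iris-classification:latest'
    into (registry, repository, tag). Simplified sketch: digests are not handled."""
    registry = "docker.io"  # Docker's default registry
    parts = ref.split("/", 1)
    # Docker treats the first component as a registry host if it looks like one
    if len(parts) == 2 and ("." in parts[0] or ":" in parts[0] or parts[0] == "localhost"):
        registry, remainder = parts
    else:
        remainder = ref
    # The tag is whatever follows the last ':' in the remaining name
    name, sep, tag = remainder.rpartition(":")
    if not sep or "/" in tag:
        name, tag = remainder, "latest"  # Docker's default tag
    return registry, name, tag


print(parse_image_reference("srtpan.azurecr.io/iris-classification:latest"))
print(parse_image_reference("ubuntu"))
```

The first call returns ('srtpan.azurecr.io', 'iris-classification', 'latest'), and the second returns ('docker.io', 'ubuntu', 'latest'), which is why docker pull ubuntu earlier fetched from Docker Hub without naming a registry or tag.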

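Before pushing, log in to the registry, for example with az acr login --name srtpan (using the Azure CLI) or docker login srtpan.azurecr.io. Then push the image to the registry with:

```shell
docker push srtpan.azurecr.io/iris-classification:latest
```

The image now appears under Repositories in your registry on the Azure portal. From there, you can run it on an Azure service such as Azure Container Instances or App Service.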

Congratulations on deploying your ML model with Docker!

Container orchestration

If you have heard about Docker, you must have also heard about Kubernetes. We are gradually building up to Kubernetes, which we will cover in detail in Part 3.

For now, let us walk through Container orchestration.

Container orchestration automates the deployment, management, scaling, and networking of containers. Container orchestration can be used in any environment where you use containers. It can help you to deploy the same application across different environments without needing to redesign it.

Source: Red Hat

Container orchestration can be used to automate and manage tasks such as:

Provisioning and deployment

Configuration and scheduling

Resource allocation

Container availability

Scaling or removing containers based on balancing workloads across your infrastructure

Load balancing and traffic routing

Monitoring container health

Configuring applications based on the container in which they will run

Keeping interactions between containers secure
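Several of these tasks, such as container availability, scaling and health monitoring, come down to one idea: the orchestrator compares the state you asked for with the state it observes and acts on the difference. The toy Python sketch below illustrates that reconciliation idea for replica counts only. The function reconcile, its inputs and the generated container names are hypothetical; real orchestrators such as Kubernetes track far richer state.

```python
def reconcile(desired_replicas, running):
    """Toy reconciliation step for one service.

    desired_replicas: how many healthy containers should be running.
    running: dict mapping container name -> "healthy" or "unhealthy".
    Returns (action, container_name) pairs that move the observed state
    towards the desired state.
    """
    actions = []
    healthy = []
    for name, status in sorted(running.items()):
        if status == "healthy":
            healthy.append(name)
        else:
            # Stop unhealthy containers; the scale-up step below replaces them
            actions.append(("stop", name))
    # Scale up: start new containers until the desired count is reached
    for i in range(desired_replicas - len(healthy)):
        actions.append(("start", f"new-{i}"))
    # Scale down: stop any surplus healthy containers
    for name in healthy[desired_replicas:]:
        actions.append(("stop", name))
    return actions


print(reconcile(3, {"web-1": "healthy", "web-2": "unhealthy"}))
```

The call above returns [('stop', 'web-2'), ('start', 'new-0'), ('start', 'new-1')]. An orchestrator runs this kind of comparison continuously, so a crashed container is replaced without manual intervention.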

Conclusion

Containers can change how machine learning models reach end users by making their deployment safe and fast. They also help optimize resource usage. To acquaint you with containers, we introduced a series on ML model deployment in Containers.

In Part 1 of our series, we introduced containers, compared virtualization and containerization, and discussed Docker and Container orchestration.

In part 2, we saw the hands-on deployment of an ML Model on Docker and understood Container Orchestration.

In part 3, we will dive deeper into Kubernetes.

Do share, subscribe and support Coffee and Engineering!